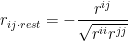

To check for multicollinearity in multivariate analysis, you can examine the signs of the partial correlation matrix. The partial correlation coefficient $r_{ij \cdot \mathrm{rest}}$ is the correlation between $x_i$ and $x_j$ given all the covariates other than $x_i$ and $x_j$. Let $R = (r_{ij})$ be the correlation matrix and $R^{-1} = (r^{ij})$ its inverse.

Divide each element of the inverse matrix by the square root of the product of the two corresponding diagonal elements and reverse the sign; the results are the partial correlation coefficients. The matrix of these coefficients is the partial correlation matrix.
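Written as a formula, the rule above reads as follows. Here $r^{ij}$ denotes the $(i, j)$ element of the inverse matrix; the superscripts are used in this sketch to distinguish it from the correlation $r_{ij}$.

$$
r_{ij \cdot \mathrm{rest}} = -\frac{r^{ij}}{\sqrt{r^{ii}\, r^{jj}}}
$$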

If the sign of an element differs between the correlation matrix and the partial correlation matrix, this suggests multicollinearity. If there is an exact linear relationship among the covariates, the correlation matrix is singular and its inverse cannot be obtained.
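The point about an exact linear relationship can be checked numerically. The following is a minimal sketch in Python with NumPy, which is not part of the Excel workflow described here; the data and variable names are made up for illustration. When one covariate is an exact linear combination of the others, the determinant of the correlation matrix is zero up to rounding error, so no usable inverse exists.

```python
import numpy as np

rng = np.random.default_rng(0)       # fixed seed so the sketch is reproducible
x1 = rng.normal(size=50)
x2 = rng.normal(size=50)
x3 = 2.0 * x1 - x2                   # exact linear combination of x1 and x2

# Each row passed to corrcoef is treated as one variable
R = np.corrcoef([x1, x2, x3])
det_R = np.linalg.det(R)

# A determinant this close to zero means R is singular for practical purposes
print(abs(det_R) < 1e-8)
```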

You can obtain the partial correlation matrix as follows, assuming that you already have the correlation matrix.

- Get the inverse of the correlation matrix

- Divide each element of the inverse matrix by the square root of the product of the corresponding diagonal elements, and reverse the sign

| | A | B | C |
|---|---|---|---|
| 1 | 1.000 | 0.800 | 0.300 |
| 2 | 0.800 | 1.000 | -0.700 |
| 3 | 0.300 | -0.700 | 1.000 |

1. Get the inverse of the correlation matrix

Excel has a worksheet function, MINVERSE, that returns the inverse matrix. Select the output range (A5:C7 here), type the formula, and press the Control, Shift, and Enter keys at the same time to confirm it as an array formula.

{=MINVERSE($A$1:$C$3)}

| | A | B | C |
|---|---|---|---|
| 5 | -0.917 | 1.817 | 1.547 |
| 6 | 1.817 | -1.637 | -1.691 |
| 7 | 1.547 | -1.691 | -0.647 |
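As a cross-check outside Excel, the same inverse can be sketched in Python with NumPy (an assumption of this example; the workflow here uses only Excel). Multiplying the correlation matrix by its computed inverse should give the identity matrix up to rounding error.

```python
import numpy as np

# Correlation matrix from cells A1:C3
R = np.array([[1.0, 0.8, 0.3],
              [0.8, 1.0, -0.7],
              [0.3, -0.7, 1.0]])

R_inv = np.linalg.inv(R)             # counterpart of MINVERSE

# R times its inverse should reproduce the 3x3 identity matrix
is_identity = np.allclose(R @ R_inv, np.eye(3))
print(np.round(R_inv, 3))
print(is_identity)
```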

2. Divide each element of the inverse matrix by the square root of the product of the corresponding diagonal elements, and reverse the sign

Use the INDEX, ROW, and COLUMN functions. Paste the following formula into the corresponding cells (A9:C11 here). The number subtracted from the return value of ROW (and, if needed, COLUMN) depends on where you place the output: here, subtracting 8 maps worksheet rows 9 to 11 onto rows 1 to 3 of the inverse matrix.

=-INDEX($A$5:$C$7, ROW()-8,COLUMN())/SQRT(INDEX($A$5:$C$7, ROW()-8, ROW()-8)*INDEX($A$5:$C$7, COLUMN(),COLUMN()))

| | A | B | C |
|---|---|---|---|
| 9 | 1.000 | -1.483 | -2.007 |
| 10 | -1.483 | 1.000 | 1.642 |
| 11 | -2.007 | 1.642 | 1.000 |
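The two steps can also be sketched in Python with NumPy as a way to cross-check the worksheet. This is an illustrative sketch, not part of the Excel procedure: the function name `partial_correlation_matrix` is hypothetical, and fixing the diagonal at 1 is a convention chosen for this example.

```python
import numpy as np

def partial_correlation_matrix(R):
    """Hypothetical helper: partial correlation matrix from correlation matrix R."""
    R = np.asarray(R, dtype=float)
    P = np.linalg.inv(R)                 # step 1: inverse of the correlation matrix
    d = np.diag(P)                       # diagonal elements of the inverse
    # Step 2: divide by the square root of the product of the diagonal elements
    # and reverse the sign (assumes each product d[i] * d[j] is positive)
    partial = -P / np.sqrt(np.outer(d, d))
    np.fill_diagonal(partial, 1.0)       # convention for this sketch
    return partial

R = np.array([[1.0, 0.8, 0.3],
              [0.8, 1.0, -0.7],
              [0.3, -0.7, 1.0]])
partial = partial_correlation_matrix(R)

# Flag off-diagonal elements whose sign differs between the two matrices
off_diag = ~np.eye(len(R), dtype=bool)
sign_mismatch = (np.sign(R) != np.sign(partial)) & off_diag

print(np.round(partial, 3))
print(sign_mismatch)
```

For this example every off-diagonal pair changes sign, which is the pattern described above as a sign of multicollinearity. The example matrix also has a negative determinant (-0.556), so it is not positive semidefinite, which is why the coefficients fall outside the range from -1 to 1.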

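The partial derivative of f is defined by the following equation.

It is written in that form, whereas the ordinary derivative of f with respect to y, with x held constant, is written as

The total differential of f is defined as follows.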